ixAutoML

Interactive and explainable, human-centered AutoML: interactivity and explainability make AutoML systems more human-centered.

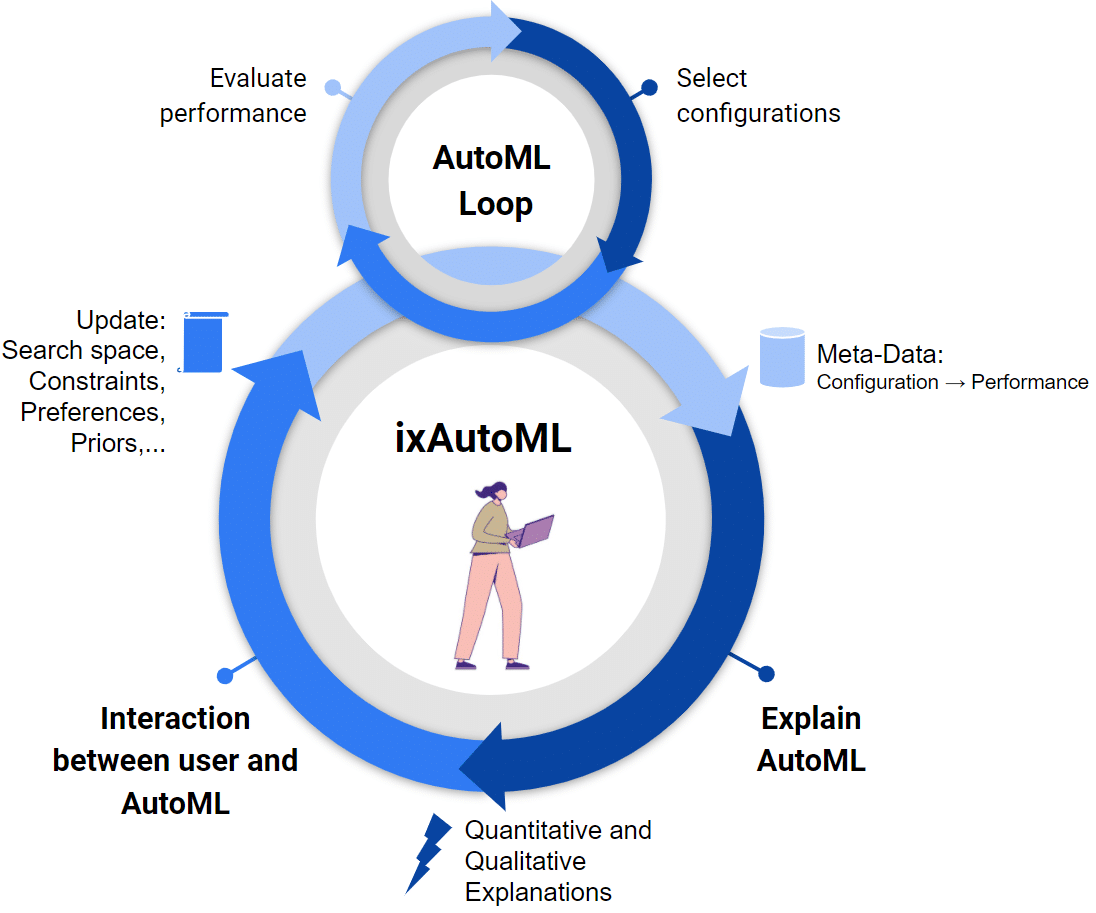

Trust and interactivity are key factors for the future development and use of automated machine learning (AutoML). AutoML supports developers and researchers in designing high-performing, task-specific machine learning pipelines, including preprocessing, predictive algorithms, their hyperparameters and, where applicable, the architecture design of deep neural networks. Although AutoML is ready for prime time, the democratization of machine learning through AutoML has not yet been achieved. In contrast to previous, purely automation-centered approaches, ixAutoML places the human at the center at several stages. Trustworthy use of AutoML rests on explaining its results and processes. To this end, we aim for the following: (i) explaining the static effects

European Research Council – ERC Starting Grant

Contact

Project coordinator and project lead